- H4CKER BY NATHAN BINFORD


THIS WEEK: AI CONTENT ISN'T WRITTEN, IT'S ENGINEERED

It's easier to get good code out of LLMs than good content. Find out what it takes to generate human-sounding content from a one-shot prompt.

🌟 Editor's Note

Thanks for reading H4CKER, where I share my secret sauce (my best tips and tricks) for free, along with videos on the prompts, automations, and vibe coded apps I’m building and using in my AI/marketing agency. Like something? Tell me. Don’t like something? Tell me that too!

Want more leads? I can help.

Let’s talk about how AI and automation can help grow your business.

😖 Content Prompts Are Harder Than Coding Prompts

You can get some really impressive code out of LLMs with a fairly minimal amount of effort. But if you want to generate content that’s worth reading, it’s going to take a LOT of effort and prompt-engineering finesse.

Increasingly, writing good prompts is kind of like writing code. It’s in English, of course, but really effective prompts require structure, organization, planning, and refinement.

They’re not half-thought-through, stream-of-consciousness babble.

The conversational approach works when you’re self-educating with AI, but really doesn’t work when you’re building (coding) or generating content (writing).

AI speaks and thinks in code. It intuitively (in a manner of speaking) understands programmatic logic. It’s “easy” for GPT-5, Claude 4, etc. to generate good code.

And, as you’d expect from the name, large language models understand human writing very well, but they still struggle with producing writing that feels human.

That makes sense, because AI code doesn’t look much like human code either. We just don’t notice its foreign feel the way AI writing triggers our senses (STRANGER DANGER).

The key to getting really good results out of your content prompts is less about the prompting framework and more about detail and specificity. It’s also about learning how to talk about language and writing in terms the AI understands natively.

👷 To Build A Good Prompt, You Need A Strong Foundation

Prompting content is all about structure and patterns. Good content prompts tend to be long and very detailed. Structure helps the AI make sense of all the individual instructions as part of a bigger picture.

The individual instructions, though, are all about targeting specific patterns that you do or don’t want the AI to use when generating your content.

First, let’s lay a solid foundation for your content prompt.

Your prompt should contain a number of variables and sections.

Variables set important data points in a reusable way. Sections organize your instructions so they’re clearly laid out and easy for the AI to understand.

These are the important variables and sections in my content prompts:

Variables

Author: Who is the AI “ghostwriting” for?

Audience: Who is the article targeting as readers?

Goal: What do you want this content to accomplish?

Reading Level: How complicated should the writing be?

Voice: 1st, 2nd, or 3rd person? Active or passive voice?

Topic: Which search query are you optimizing for?

Blog: What website are you writing for?

Sections

Meta Information: Variables and non-content instructions.

Rules: Positive and negative instructions for generating content.

Writing Style: AI assessment of a sample for style.

Content Language: AI assessment of language in a sample.

Content Tone: AI assessment of tone in a sample.

Formatting: Instructions specific to the formatting of output.

CTA: The location and format of the call-to-action.

Sample: An example (a few paragraphs) of content to emulate.

Data: Research data provided to prevent hallucination.

Acceptance Condition: Reinforcement section for essentials.
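If you generate prompts programmatically, here’s a minimal Python sketch of one way to stitch those variables and sections into a single prompt. It’s an illustration under assumptions, not the exact format of my prompts: the `build_prompt` helper, the `### Heading` delimiters, and the example values are all hypothetical. Variables land in Meta Information as `Name: value` lines, and the function refuses to build a prompt if any other section is empty.

```python
# Section order mirrors the list above; the "### " delimiter is an assumption.
SECTION_ORDER = [
    "Meta Information",
    "Rules",
    "Writing Style",
    "Content Language",
    "Content Tone",
    "Formatting",
    "CTA",
    "Sample",
    "Data",
    "Acceptance Condition",
]


def build_prompt(variables: dict[str, str], sections: dict[str, str]) -> str:
    """Assemble a content prompt from variables and named sections (hypothetical helper).

    Variables are rendered as "Name: value" lines inside Meta Information;
    every other section must be non-empty and is emitted in a fixed order.
    """
    missing = [name for name in SECTION_ORDER[1:] if not sections.get(name, "").strip()]
    if missing:
        raise ValueError(f"Missing sections: {', '.join(missing)}")

    # Meta Information = variables plus any non-content instructions.
    meta_lines = [f"{key}: {value}" for key, value in variables.items()]
    extra_meta = sections.get("Meta Information", "").strip()
    if extra_meta:
        meta_lines.append(extra_meta)

    parts = ["### Meta Information\n" + "\n".join(meta_lines)]
    for name in SECTION_ORDER[1:]:
        parts.append(f"### {name}\n{sections[name].strip()}")
    return "\n\n".join(parts)


if __name__ == "__main__":
    variables = {  # hypothetical example values
        "Author": "Jane Doe, owner of a neighborhood bistro",
        "Audience": "Independent restaurant owners",
        "Goal": "Get readers to book a website audit",
        "Reading Level": "8th grade",
        "Voice": "2nd person, active voice",
        "Topic": "restaurant website design",
        "Blog": "example.com/blog",
    }
    sections = {name: f"(your {name.lower()} instructions here)" for name in SECTION_ORDER[1:]}
    print(build_prompt(variables, sections))
```

Failing loudly on an empty section is a deliberate choice: a prompt that silently ships without its Data or Acceptance Condition section is exactly where hallucinations and drift creep in.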

Fill in each of these thoroughly, packing as much detail into every instruction as possible, and your content will improve 10x (if not 100x).

But, the devil’s in the details…so I’m also going to show you a sample of what the writing instructions (rules, style, language, tone, formatting) look like in a well-engineered prompt.

⌚ Take Your Time With The Details

As I covered in the featured video in last week’s email (“Content Prompt Engineering With GPT-5 Thinking”), I’ve gotten great results from working with GPT-5 to design and optimize the prompts I use with it and other LLMs.

That video goes into very specific detail on how to use AI to generate writing instructions for your content prompts, so I strongly advise you to watch it if you haven’t already.

Here’s a sample from one of my current content prompts:

(In Rules section)

Do not use em dashes, ever. For asides or callouts, use parentheses. Otherwise use semi-colons.

Ex: (BAD) “Every decision—colors, fonts, layout, photos—plays a role in how diners experience your restaurant.”

Ex: (GOOD) “Every decision (colors, fonts, layout, photos) plays a role in how diners experience your restaurant.”

(In Writing Style section)

First paragraph = 2–3 short sentences. No stats. Sentence 2 states the reader benefit. Treat as a hook.

After the first 2–3 hook sentences, include one plain-language “stakes” sentence that provides contrast (e.g., “…or it can make you look closed or careless.”).

(In Content Language section)

Highlight trends and shifts in the industry with a sense of immediacy and relevance to the target audience.

Narrative style that combines storytelling with clear step-by-step strategies.

Mixes detailed instructions with personal anecdotes to maintain both clarity and relatability.

Employs parenthetical asides to keep clarity in sentences or to allow levity / humor.

This is just a tiny selection of my writing instructions.

This prompt is over 1,500 words long (the average length of an article), not counting the research data or writing sample, so I can’t stress enough that good writing prompts come from hours of grinding through the prompt engineering process described in the video I linked above.

If it were easy, everyone would be doing it.
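Some of these rules are mechanical enough to double-check after generation. Here’s a rough Python sketch (an add-on idea, not part of the prompt itself) that flags em dashes and tests the “first paragraph = 2–3 short sentences, no stats” rule. The `check_draft` name is hypothetical, sentences are split naively on `.`, `!`, and `?`, and “stats” are approximated as any digit, so treat it as a first-pass filter rather than a real linter.

```python
import re

EM_DASH = "\u2014"


def check_draft(text: str) -> list[str]:
    """Return rule violations found in a generated draft (hypothetical helper).

    Checks two rules from the sample prompt:
    - no em dashes anywhere in the text
    - the first paragraph has 2-3 sentences and no stats (approximated as digits)
    """
    problems = []

    dash_count = text.count(EM_DASH)
    if dash_count:
        problems.append(f"Found {dash_count} em dash(es)")

    # Paragraphs are assumed to be separated by blank lines.
    paragraphs = [p.strip() for p in text.split("\n\n") if p.strip()]
    if not paragraphs:
        return problems + ["Draft is empty"]

    first = paragraphs[0]
    # Naive sentence split: a run of text ending in ., !, or ?
    sentences = re.findall(r"[^.!?]+[.!?]", first)
    if not 2 <= len(sentences) <= 3:
        problems.append(f"First paragraph has {len(sentences)} sentence(s), expected 2-3")
    if re.search(r"\d", first):
        problems.append("First paragraph contains a number (possible stat)")

    return problems


if __name__ == "__main__":
    draft = (
        "Your website is your front door. A good one fills tables.\n\n"
        "Every decision\u2014colors, fonts\u2014counts."
    )
    print(check_draft(draft))
```

A check like this is cheap to run on every draft, so you can regenerate (or hand-edit) only the outputs that actually break a rule.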

⚙️ The Laboratory: Prompts & Automations

Steal this prompt to generate relevant images for your articles and blog posts automatically, using GPT-5 and (I recommend) Flux Pro Ultra. Watch as I craft a highly effective image generation prompt and demonstrate its results.

Here’s what I show in the video:

✅ How To Generate Image Prompts From Content: Just feed your article content into the prompt and it automatically generates relevant image prompts.

✅ How To Set Variables In Prompts: Learn this super useful prompting hack: set variables at the top of your prompts and reuse them throughout.

✅ How To Use Replicate.com To Access Flux: Flux is a fast, cheap, ultra-realistic AI image generator that shines in this workflow.
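In the video, the variables live in the prompt text and the model reads them. If you’d rather fill them in code before the prompt ever reaches the model (a different technique: plain string templating), Python’s built-in `string.Template` does the job. This is a sketch under assumptions: the variable names, values, and prompt wording are hypothetical, and `render_image_prompt` is an illustrative helper.

```python
from string import Template

# Variables are set once, at the top... (hypothetical example values)
VARIABLES = {
    "brand": "Acme Coffee",
    "style": "warm, natural-light product photography",
    "aspect_ratio": "16:9",
}

# ...and referenced as many times as needed in the body of the prompt.
IMAGE_PROMPT_TEMPLATE = Template(
    "You write image-generation prompts for $brand.\n"
    "Read the article below and propose 3 image prompts.\n"
    "Every prompt must use this style: $style.\n"
    "Every prompt must target an aspect ratio of $aspect_ratio.\n"
    "Never show text or logos other than $brand's.\n\n"
    "Article:\n$article"
)


def render_image_prompt(article: str, variables: dict[str, str]) -> str:
    """Fill the template; substitute() raises KeyError if any variable is missing."""
    return IMAGE_PROMPT_TEMPLATE.substitute(variables, article=article)


if __name__ == "__main__":
    print(render_image_prompt("Our new cold brew is steeped for 18 hours...", VARIABLES))
```

Using `substitute()` instead of `safe_substitute()` is intentional: a missing variable fails immediately instead of sending a literal `$style` to the image model.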

Streamline and scale with business automation and AI. Schedule a free audit today!

🚀 AI / Marketing News

AI Tested In Dogfight Scenarios For The First Time

I get the appeal (and potential necessity) of this, but there will definitely be human lives sacrificed mechanically for “the greater good”.

Source: FoxNews

Takeaways:

Raft AI’s Starsage system was tested with F-16s, F/A-18s, and F-35s

Starsage acted as an AI 'air battle manager' replacing human direction

The system provided real-time threat assessments and recommendations

AI made tactical decisions in seconds vs minutes with 1:1 pilot support

Starsage analyzed and identified enemy group formations

Raft AI might have prevented the recent military-airliner near-collision

A human-over-the-loop model was preserved so pilots could override

My Take:

The Pentagon just handed the AI battle manager its wings, and it didn't crash and burn.

The Starsage system is no gimmick; it shaves critical minutes off decision loops and gives pilots their own autonomous strategist in the sky.

That’s undoubtedly impressive. The question that remains is how much of our decision-making we should delegate to non-human operators (and the stakes are rising).

Anthropic Drops Chrome-Savvy AI Agent: Claude Can Now Take The Wheel

Anthropic recently launched a Chrome extension that lets Claude use your browser, likely in response to the notable reception of Perplexity AI’s agentic browser, Comet.

Source: Claude

Takeaways:

Anthropic introduces a new AI agent in a Chrome extension

This adds Claude to the list of AI agents equipped to act on your behalf online

This mirrors OpenAI Operator, Google Astra, and Perplexity Comet

It performs tasks like clicking buttons, filling forms, or navigating the web

Security and privacy remain unclear, raising concerns

The feature is still in roll-out / testing phases

My Take:

Anthropic is finally playing catch-up by dropping a Claude-powered agent that can do things in your browser, instead of just describing them.

This is a major development for Claude users: it can click, scroll, and fill out forms like your own personal assistant.

I don’t think this will have the same impact as Perplexity’s Comet, but it shows that the AI agent wars are heating up.

Google Rolls Out August 2025 Spam Update (And No One Cares)

While Google’s dominance in the search world hasn’t changed much (yet), its approach to search could not be more different from prior years. Everything is AI now, so fighting spam and delivering quality results have become hand-wringing and propaganda exercises, and nothing more.

This is the blackhat’s time to shine…

Source: SearchEngineLand

Takeaways:

Google released its Aug 2025 spam update, the first since Dec 2024

Described as a 'normal' update, it will affect all languages and regions

No specific type of spam was identified as a target

It’s the first (official) algorithm shift since the June 2025 core update

My Take:

Another day, another Google update set to shake your SERP trees. While Mountain View calls it a 'normal' spam update, the lack of clarity around what exactly is being targeted suggests it’s probably neutered and not much to worry about.

Typical advice applies: wait a few weeks, run tests, and don’t react too soon.

A toothless spam update would be a bigger signal than one that actually did something.

Eyes peeled…

Researchers Are Already Fleeing Meta’s AI Superintelligence Lab

This is hardly surprising. Even with “Zuck You” money, Meta lacks the soul (and talent) to create a meaningful AI platform. We shouldn’t be concerned. They would be the ones to unleash Skynet accidentally anyway.

Source: Wired

Takeaways:

Meta’s SI Lab launched in ‘24 under Meta chief AI scientist Yann LeCun.

The lab's aim: build 'autonomous systems that can reason / plan / reflect'

5+ key researchers have left to competitors like Google and OpenAI

Insiders report a lack of clarity and internal cohesion about the lab’s focus

Yann LeCun’s open skepticism of RL and LLMs clashes with industry realities

Originally an AI research group, Meta’s aggressive pivots have destabilized it

Despite massive investments, Meta appears to be battling an AI brain drain

My Take:

Meta tried to flex its AGI ambitions by launching a fancy new AI superlab with LeCun at the helm, but the top talent is already ghosting them.

It's a classic case of 'big company whiplash'. The team lacks focus and LeCun’s anti-hype, open research doctrine isn’t exactly helping them in an industry fueled by excitement around LLMs.

Honestly, the ones jumping ship may be the smart ones.

For anyone watching the AI arms race, this is a reminder that throwing money and mission statements around isn't enough to hold onto top talent.

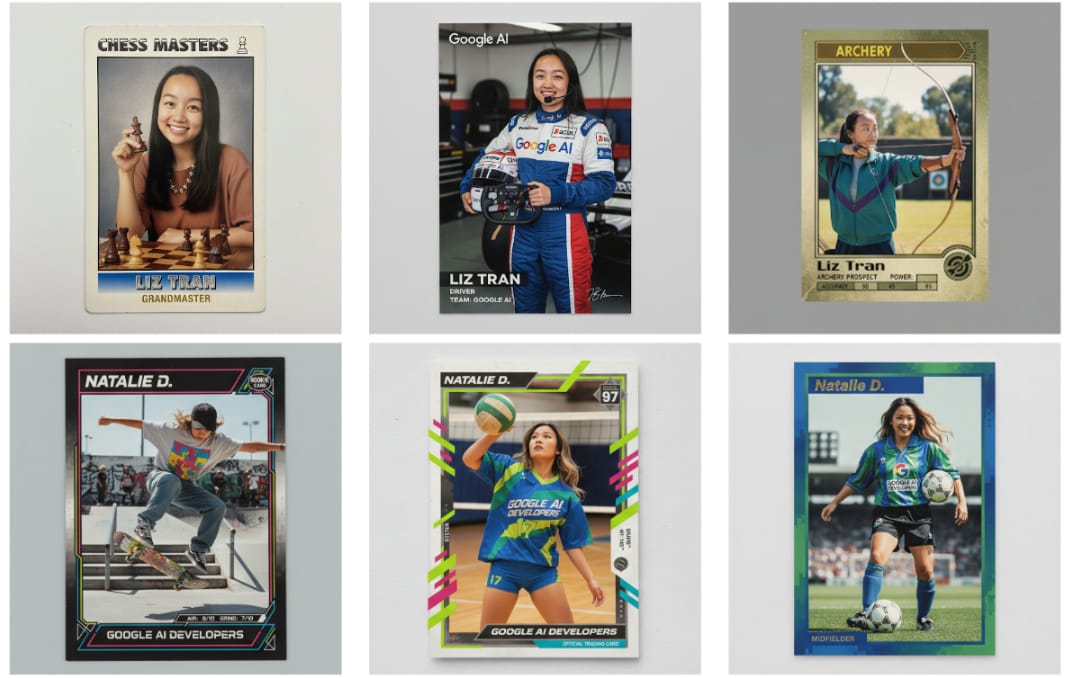

Google Drops Gemini 2.5 Flash Image (aka nano-banana)

Consistency between images and scenes (especially of the subject, i.e., the character) has been one of the biggest challenges for generative AI.

Apparently, Google has cracked this nut with its latest image generation model. First released secretly as “nano-banana”, it’s now publicly available as Gemini 2.5 Flash Image.

Source: Google

Takeaways:

It’s Google’s latest multimodal image generation / editing model

Has character consistency, template adherence, and multi-image fusion

Enables precise image editing

Built atop Gemini’s world knowledge, it handles useful real-world tasks

Accessible via Google AI Studio, Vertex AI, and Gemini API

$0.039 per image (~1290 output tokens).

Includes free remixable app templates for fast prototyping

Model outputs are watermarked with SynthID to indicate AI generation

Integrated into OpenRouter and fal.ai for developer access.

My Take:

Google’s Gemini 2.5 Flash Image just rolled out and, no lie, this thing is a serious contender despite the silly banana suit (nano-banana).

It’s cheap, fast, remixable, and shockingly accurate across use cases real marketers and product folks actually care about: dynamic product images, consistent brand characters, employee ID systems, and social-ready edits (sans Photoshop).

The integration with AI Studio makes it stupid simple to build your own tools or swap elements between scenes (using only natural language).

And since it's priced at roughly 4 cents per image ($0.039), even bootstrapped brands can benefit from its serious creative firepower without wasting resources.
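For quick budgeting, here’s a back-of-the-envelope Python sketch using the $0.039-per-image and ~1290-tokens-per-image figures from the takeaways. The token-based cross-check assumes a rate of $30 per million output tokens, which isn’t stated in this newsletter, so verify it against Google’s current pricing before relying on it.

```python
PRICE_PER_IMAGE_USD = 0.039      # per-image figure quoted in the takeaways
TOKENS_PER_IMAGE = 1290          # "~1290 output tokens" per image, as quoted
ASSUMED_PRICE_PER_M_TOKENS = 30  # ASSUMPTION: USD per 1M output tokens; check current pricing


def batch_cost(num_images: int, price_per_image: float = PRICE_PER_IMAGE_USD) -> float:
    """Estimated USD cost for generating num_images images, rounded to cents."""
    return round(num_images * price_per_image, 2)


def per_image_from_tokens(tokens: int = TOKENS_PER_IMAGE,
                          price_per_m: float = ASSUMED_PRICE_PER_M_TOKENS) -> float:
    """Per-image cost derived from token pricing (1290 * 30 / 1,000,000)."""
    return tokens * price_per_m / 1_000_000


if __name__ == "__main__":
    print(f"Per image (from tokens): ${per_image_from_tokens():.4f}")
    for n in (100, 1_000, 10_000):
        print(f"{n:>6} images: ${batch_cost(n):,.2f}")
```

Under that assumed rate, 1290 tokens works out to about $0.0387, which rounds to the quoted $0.039, so a batch of 1,000 images lands around $39.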

Nathan Binford

AI & Marketing Strategist

I hope you enjoyed this newsletter. Please, tell me what you like and what you don’t, and how to make this newsletter more valuable to you.

And if you need help with AI, marketing, or automation, grab time on my calendar for a quick chat and I’ll do my best to help!